Oscar Prediction Shake-Up? Nate Silver Given New Game-Changing Role By ABC

UPDATED WITH MORE DETAILS: The Academy Awards matter in and around Hollywood for three main reasons: the nominations, which bring audiences into theaters as a movie marketing tool; the lucrative ‘For Your Consideration’ ads they generate; and the global telecast announcing the winners so everybody can bask in their reflected glory. Now ABC is trying to corner the market on all three with one move. Not only does the network broadcast the Oscars, but its news division is also guaranteeing data guru Nate Silver a role. How much of a Hollywood game-changer will this become? Not much of one, judging from how little attention his movie awards prognostication has garnered in the past. Twice before, in 2009 and 2011, he sought to predict the Academy Award winners in 6 major categories based on a “mix of statistical factors”. His track record was 9 correct picks in 12 tries, for a 75% success rate. “Not bad, but also not good enough to suggest that there is any magic formula for this,” he wrote. For the 6 marquee categories he handicapped in 2013, he was correct only on the sure things and missed the 2 that were more complex to predict. Meh.

I’ve been pondering this news, scooped by Politico’s Mike Allen, about all the inducements ESPN/ABC News gave the 35-year-old to leave The New York Times, including extensive air time, a digital empire, and inclusion in the Oscars. A lot of showbiz websites and blogs, large and small, smart and smarmy, clued-in and clueless, depend on their Oscar prognostication to drive traffic and pay the bills. But unless Silver allows for the myriad variables that go into Academy Award noms and wins (insider stuff that Deadline knows from covering movie awards season in depth), he won’t become more accurate.

For instance: Who’s popular, deserving, and appropriately humble enough to get nominated? Which film’s director is considered a douchebag whom nobody wants to win? What studio did a lousy job campaigning for the Academy Awards? How badly is Harvey Weinstein badmouthing the competition? I’ve always said that most Oscar voters are not just geriatric and cranky but also jealous and vengeful. Whether Silver’s statistical model can take those idiosyncrasies into account and also cover more Oscar categories than just 6 remains to be seen. But I’ll bet on Deadline’s own awards columnist Pete Hammond to beat Silver’s prognostications in 2014.

Obviously, the annual Academy Awards process isn’t as big a deal as U.S. national election campaigns. But it’s interesting to note that Silver’s FiveThirtyEight blog was driving 20% of all traffic to the NYT as the last election heated up. That’s because in 2012 he correctly predicted the winner in all 50 states, and in 2008 the winner in 49 of 50 states, as well as the winners of all 35 U.S. Senate races that year. What ESPN/ABC offered was to return Silver to his flagship FiveThirtyEight.com, put him on air at ESPN and ABC, and develop verticals on a variety of new topics. And now he’ll work for the TV home of the Oscars. It’s a safe bet that Silver’s blog will become one of the go-to places for Oscar dollars. But not for accuracy.

Can Silver truly become a trusted player in this showbiz space? Maybe. But he’ll have to do a lot better. Of course, if he’s wrong his first time out after being hyped far more than in the past, he’ll be laughed out of the biz. First, he has to stop relying on all the other film awards each year. They simply don’t matter. It might help if the Academy of Motion Picture Arts and Sciences handed Silver its list of voters. Considering that AMPAS and ABC are joined at the hip because their broadcast pact runs at least through 2020, that’s doable. Whether members will resent having their privacy violated, or refuse to take part in any polling, is another challenge. Certainly the Academy has discouraged voters over the years from cooperating with any prediction schemes.

So what methodology will Silver use? As best as I can understand it (and please remember that I’m mathematically challenged), it’s a so-called “educated and calculated estimation” stemming from his reliance on statistics and the study of performance, economics, and metrics. This guy first made his name in baseball analytics, where he developed PECOTA, a system for projecting Major League players’ performance and careers, and later sold it. His FiveThirtyEight is a self-created political polling aggregation website (which took its name from the number of electors in the U.S. Electoral College) that feeds the polls into a statistical forecasting model. He needs to better adapt that model to the Oscars instead of just relying on other awards shows.

Here’s what Silver wrote about his Oscar predictions in 2013:

This year, I have sought to simplify the method, making the link to the FiveThirtyEight election forecasts more explicit. This approach won’t be foolproof either, but it should make the philosophy behind the method more apparent. The Oscars, in which the voting franchise is limited to the 6,000 members of the Academy of Motion Picture Arts and Sciences, are not exactly a democratic process. But they provide for plenty of parallels to political campaigns.

In each case, there are different constituencies, like the 15 branches of the Academy (like actors, producers and directors) that vote for the awards. There is plenty of lobbying from the studios, which invest millions in the hopes that an Oscar win will extend the life of their films at the box office. And there are precursors for how the elections will turn out: polls in the case of presidential races, and for the Oscars, the litany of other film awards that precede them.

So our method will now look solely at the other awards that were given out in the run-up to the Oscars: the closest equivalent to pre-election polls. These have always been the best predictors of Oscar success. In fact, I have grown wary that methods that seek to account for a more complex array of factors are picking up on a lot of spurious correlations and identifying more noise than signal. If a film is the cinematic equivalent of Tim Pawlenty — something that looks like a contender in the abstract, but which isn’t picking up much support from actual voters — we should be skeptical that it would suddenly turn things around.

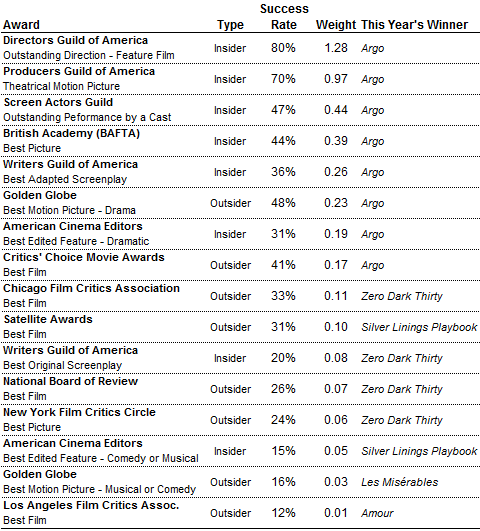

Just as our election forecasts assign more weight to certain polls, we do not treat all awards equally. Instead, some awards have a strong track record of picking the Oscar winners in their categories, whereas others almost never get the answer right (here’s looking at you, Los Angeles Film Critics Association).

These patterns aren’t random: instead, the main reason that some awards perform better is because some of them are voted on by people who will also vote for the Oscars. For instance, many members of the Screen Actors Guild will vote both for the SAG Awards and for the Oscars. In contrast to these “insider” awards are those like the Golden Globes, which are voted upon by “outsiders” like journalists or critics; these tend to be less reliable.

Let me show you how this works in the case of the Best Picture nominees. There are a total of 16 awards in my database, not counting the Oscars, that are given out for Best Picture or that otherwise represent the highest merit that a voting group can bestow on a film. (For instance, the Producers Guild Awards are technically given out to the producers of a film rather than the film itself, but they nevertheless serve as useful Best Picture precursors.) In each case, I have recorded how often the award recipient has corresponded with the Oscar winner over the last 25 years (or going back as far as possible if the award hasn’t been around that long).
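The success rate described here is simply the share of years in which a precursor award’s pick matched the Oscar winner. A minimal Python sketch, assuming each award’s history is stored as a year-to-film mapping; the function name `hit_rate`, the data layout and the film titles are hypothetical illustrations, not Silver’s actual database:

```python
# Hypothetical sketch: how often a precursor award matched the Oscar winner.
# Assumes each history is a dict mapping year -> winning film title.

def hit_rate(precursor, oscars, window=25):
    """Return the fraction of shared years in which both picks agree.

    Only years covered by both histories count, so a younger award is
    scored "going back as far as possible". At most the most recent
    `window` shared years are used.
    """
    shared_years = sorted(precursor.keys() & oscars.keys())[-window:]
    if not shared_years:
        raise ValueError("the two histories share no years")
    matches = sum(precursor[year] == oscars[year] for year in shared_years)
    return matches / len(shared_years)


if __name__ == "__main__":
    # Placeholder titles, not real results.
    oscars = {2009: "Film A", 2010: "Film B", 2011: "Film C", 2012: "Film D"}
    guild = {2010: "Film B", 2011: "Film X", 2012: "Film D"}
    print(hit_rate(guild, oscars))            # 2 of 3 shared years match
    print(hit_rate(guild, oscars, window=2))  # only 2011 and 2012 are used
```

Intersecting the two year sets is one simple way to handle an award that “hasn’t been around that long”: its rate is computed over however many years it actually overlaps with the Oscars.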

The best performance has come from the Directors Guild of America. Their award for Outstanding Direction in a Feature Film has corresponded with the Academy Award for Best Picture a full 80 percent of the time. (Keep in mind that Best Picture and Best Director winners rarely differ from one another — although this year, as you will see, is very likely to be an exception.) The Producers Guild awards are the next most accurate; their award for best production in a feature film has a 70% success rate in calling the Academy’s Best Picture winner. Directors and producers are the movers and shakers in Hollywood, and any evidence about their opinions ought to count for a lot – as it does in our system.

By contrast, the Golden Globe for best dramatic motion picture has only matched with the Oscar winner about half the time. And some of the awards given out by critics do much worse than this: the Los Angeles Film Critics Association’s Best Film has matched the Oscar only 12 percent of the time, for example. Our formula, therefore, leans very heavily on the “insider” awards. (The gory details: I weight each award based on the square of its historical success rate, and then double the score for awards whose voting memberships overlap significantly with the Academy.)
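The weighting rule in that parenthetical can be written out directly. A minimal sketch, assuming “based on the square” means the weight is exactly the squared success rate, and plugging in the approximate rates quoted above; the Golden Globe’s “about half” is taken as 0.50, and treating the DGA and PGA as the overlapping “insider” awards is our reading of the text:

```python
# Sketch of the weighting rule: weight = success_rate ** 2,
# doubled when the award's voters overlap significantly with the Academy.

def award_weight(success_rate, insider):
    """Return a precursor award's weight from its historical hit rate (0 to 1)."""
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0 and 1")
    weight = success_rate ** 2
    if insider:
        weight *= 2  # e.g. guild awards whose members also vote on the Oscars
    return weight


# Approximate Best Picture hit rates quoted in the text; 0.50 stands in for "about half".
PRECURSORS = {
    "DGA": (0.80, True),
    "PGA": (0.70, True),
    "Golden Globe (Drama)": (0.50, False),
    "LAFCA": (0.12, False),
}

if __name__ == "__main__":
    for name, (rate, insider) in PRECURSORS.items():
        print(f"{name:<22} {award_weight(rate, insider):.4f}")
```

Under these assumptions the DGA prize carries about 89 times the weight of the LAFCA award (1.28 versus 0.0144), which is what leaning “very heavily” on insider awards looks like in numbers.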

Ideally, we would want to look not only at which films win the awards, but also at how close the voting was (just as it is extremely helpful to look at the margin separating the candidates in a political poll). Unfortunately, none of the awards publish this information, so instead I give partial credit (one-fifth of a point) to each film that was nominated for a given award.
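Putting the scoring rules together: each winner collects its award’s full weight, and each losing nominee collects one-fifth of it. A minimal sketch under stated assumptions; the film names, results and function names are hypothetical, the weights are what the squared-success-rate rule (doubled for insiders) gives for the approximate DGA, PGA and Golden Globe rates quoted above, and picking the top scorer as the forecast is our simplification:

```python
from collections import defaultdict

NOMINEE_CREDIT = 0.2  # one-fifth of a point for a nomination that did not win


def score_films(results, weights):
    """Total weighted points per film.

    results maps award -> (winner, list of all nominees including the winner);
    weights maps award -> that award's weight.
    """
    scores = defaultdict(float)
    for award, (winner, nominees) in results.items():
        weight = weights[award]
        scores[winner] += weight  # a win is worth one full point
        for film in nominees:
            if film != winner:
                scores[film] += NOMINEE_CREDIT * weight
    return dict(scores)


def predicted_winner(scores):
    """Return the film with the highest total score."""
    return max(scores, key=scores.get)


# Hypothetical precursor results for three placeholder films.
NOMINEES = ["Film A", "Film B", "Film C"]
EXAMPLE_RESULTS = {
    "DGA": ("Film A", NOMINEES),
    "PGA": ("Film B", NOMINEES),
    "Golden Globe (Drama)": ("Film C", NOMINEES),
}
EXAMPLE_WEIGHTS = {"DGA": 1.28, "PGA": 0.98, "Golden Globe (Drama)": 0.25}

if __name__ == "__main__":
    totals = score_films(EXAMPLE_RESULTS, EXAMPLE_WEIGHTS)
    for film, points in sorted(totals.items(), key=lambda kv: -kv[1]):
        print(f"{film}: {points:.3f}")
    print("Forecast:", predicted_winner(totals))
```

With these made-up inputs Film A totals 1.526 points (a DGA win plus two nominations), ahead of Film B at 1.286 and Film C at 0.702, so one insider win outweighs an outsider win by a wide margin.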

The short version: our forecasts for the Academy Awards are based on which candidates have won other awards in their category. We give more weight to awards that have frequently corresponded with the Oscar winners in the past, and which are voted on by people who will also vote for the Oscars. We don’t consider any statistical factors beyond that, and we doubt that doing so would provide all that much insight.

(First version posted at 3 AM)

Get more from Deadline.com: Follow us on Twitter, Facebook, Newsletter